https://mathwithbaddrawings.com/2017/11/29/epitaphs-in-the-graveyard-of-mathematics/

And a follow up –

https://gorelik.net/2017/12/02/epitaphs-in-the-graveyard-of-mathematics/


Fun Facts: Automatic Trivia Fact Extraction from Wikipedia

Authors: David Tsurel, Dan Pelleg, Ido Guy, Dafna Shahaf

Article can be found here

Trivia facts can drive user engagement. But what is a trivia fact?

Is the fact “Barack Obama is part of the Obama family” a trivia fact?

Is the fact “Barack Obama is Grammy Award winner” a trivia fact?

This paper tackles the problem of automatically extracting trivia facts from Wikipedia.

In this paper Tsurel et al. focus on exploiting Wikipedia's category structure (i.e. X is a Y). A category represents a set of articles with a common theme, such as “Epic films based on actual events”, “Capitals in Europe”, or “Empirical laws”. An article can belong to several categories. The main motivation for using categories rather than free text is that categories are cleaner than sentences and capture the essence of the sentence better.

According to the Merriam-Webster dictionary, trivia are:

- unimportant facts or details

- facts about people, events, etc., that are not well-known

The first path Tsurel et al. tested was to look for small categories: “presumably, a small category indicates a rare and unique property of an entity, and would be an interesting trivia fact”. This path proved to be too specific, e.g. “Muhammad Ali is an alumnus of Central High School in Louisville, Kentucky”.

[TR] As commented in the paper, this is not a good trivia fact because the specific high school has no importance to the reader or to Ali’s character. But, as stated later, when it comes to personalizing trivia facts there may be readers who find this fact interesting (e.g. other alumni of this high school).

This led Tsurel et al. to the first required property of trivia fact – surprise.

Surprise

Surprise reflects how unusual the article is with respect to the category. To quantify it, the authors define a similarity between an article a and a category C. Since a category is a set of articles, this similarity is defined as the average similarity between a and the other articles in C.

Surprise is defined as the inverse of the average similarity –

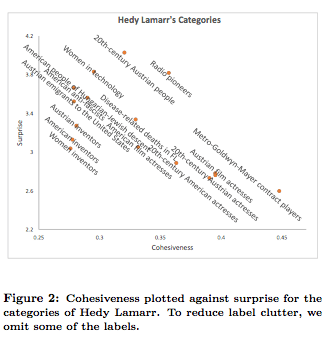

Example of results for this measure for Hedy Lamarr –

As you can see in the example above the surprise factor itself is not enough as it does not capture other aspects in Hedy Lamarr’s life (e.g. she invented radio encryption!).

Examining those categories and seeing that they are very spread out led the team to define the cohesiveness of a category.

Cohesiveness

Cohesiveness of a category measures the similarity between items in the same category. Intuitively, if an item is not similar to the other items in the category, it might indicate a trivia fact (or, as mentioned later in the paper, an anomaly).

Practically speaking, the cohesiveness of category C is defined as the average similarity between each pair of articles in the category.

Hedy Lamarr’s results w.r.t. cohesiveness –

Tying it together

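The trivia score of article a with respect to category C is defined as the product of the category's cohesiveness and the article's surprise:

$$\mathrm{trivia}(a, C) = \mathrm{cohes}(C) \cdot \mathrm{surp}(a, C)$$

Interpreting the trivia score:

- Around one – this means that $\mathrm{surp}(a, C) \approx 1 / \mathrm{cohes}(C)$, i.e. the average similarity of the article to the category is about the same as the category's cohesiveness. Meaning – the article is typical for the category, i.e. similar to other articles in the category.

- Much lower than one – “the article is more similar to other articles in the category than the average”. That means the article is a very good representative of the category.

- Higher than one – the article is not similar to the category, i.e. it is an “outsider”, which makes it a good trivia candidate.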
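To make the interplay between surprise, cohesiveness and the trivia score concrete, here is a minimal Python sketch. It assumes the score is cohesiveness multiplied by surprise and that a pairwise similarity function is already available. The toy `jaccard` similarity, the `KEYWORDS` data and all function names are illustrative assumptions, not taken from the paper.

```python
from itertools import combinations

def avg_similarity(article, category, sim):
    """Average similarity between `article` and the other articles in `category`."""
    others = [other for other in category if other != article]
    return sum(sim(article, other) for other in others) / len(others)

def surprise(article, category, sim):
    """Inverse of the average similarity: high when the article is unlike its category."""
    return 1.0 / avg_similarity(article, category, sim)

def cohesiveness(category, sim):
    """Average similarity over all unordered pairs of articles in the category."""
    pairs = list(combinations(category, 2))
    return sum(sim(a, b) for a, b in pairs) / len(pairs)

def trivia_score(article, category, sim):
    """Cohesiveness times surprise: a value above 1 marks the article as an outsider."""
    return cohesiveness(category, sim) * surprise(article, category, sim)

# Hypothetical keyword sets standing in for real article representations.
KEYWORDS = {
    "inventor_a": {"patent", "radio", "engineer"},
    "inventor_b": {"patent", "engine", "engineer"},
    "inventor_c": {"patent", "radio", "engine"},
    "actress": {"film", "hollywood", "radio"},
}

def jaccard(a, b):
    """Toy similarity: overlap of the two keyword sets."""
    return len(KEYWORDS[a] & KEYWORDS[b]) / len(KEYWORDS[a] | KEYWORDS[b])

inventors = list(KEYWORDS)  # one category containing all four articles
scores = {a: trivia_score(a, inventors, jaccard) for a in inventors}
```

In this toy category the "actress" article is the least similar to the rest, so it is the only one whose score exceeds one, while the three typical members stay below one.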

Article similarity

Standard similarity methods don’t fit this case for two main reasons –

- The authors look for broad similarity rather than similarity in the details.

- Term-frequency vectors rely on exact term matches, so semantic similarity between related terms is sometimes lost even after applying normalization techniques.

Algorithm

- Describe each article by its top K TF-IDF terms. The TF-IDF model is trained on a sample of 10,000 Wikipedia articles after stemming, stop-word removal and case folding. K=10 in their settings. The table below shows the results for the articles “Sherlock Holmes”, “Dr. Watson” and “Hercule Poirot”. As one can see, it captures the spirit of things but there are no exact matches.

- To address the exact-match problem the authors used a Word2Vec model pre-trained on Google News. Let $T_1$ and $T_2$ be the sets of the top K TF-IDF terms for articles $a_1$ and $a_2$ respectively.

- For each term in $T_1$ find the most similar term in $T_2$ based on the pre-trained Word2Vec model (and vice versa) and sum those similarities.
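The best-match step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tiny two-dimensional `VECTORS` table stands in for real pre-trained Word2Vec embeddings, the term lists are invented, and the score is left as a plain unnormalized sum because the summary above only says the similarities are summed.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)

def best_match_sum(terms_a, terms_b, vectors):
    """For each term in terms_a, add its similarity to the closest term in terms_b."""
    return sum(
        max(cosine(vectors[s], vectors[t]) for t in terms_b)
        for s in terms_a
    )

def article_similarity(terms_a, terms_b, vectors):
    """Symmetric score: best matches from a to b plus best matches from b to a."""
    return (best_match_sum(terms_a, terms_b, vectors)
            + best_match_sum(terms_b, terms_a, vectors))

# Hypothetical 2-d embeddings; a real setup would load pre-trained vectors.
VECTORS = {
    "detective": (1.0, 0.1),
    "investigator": (0.9, 0.2),
    "murder": (0.8, 0.6),
    "crime": (0.7, 0.7),
    "oven": (0.0, 1.0),
    "flour": (-0.2, 1.0),
}

holmes = ["detective", "murder"]      # stand-ins for top-K TF-IDF terms
poirot = ["investigator", "crime"]
cookbook = ["oven", "flour"]

sim_detectives = article_similarity(holmes, poirot, VECTORS)
sim_unrelated = article_similarity(holmes, cookbook, VECTORS)
```

Even though `holmes` and `poirot` share no exact term, their score is much higher than the score against the unrelated `cookbook` terms, which is the effect the embedding step is meant to achieve.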

Further optimizations of the computation, such as caching, comparing against only a subset of articles, and parallel computation, can be applied when implementing this algorithm in a production setting.

Evaluation

The authors evaluated their algorithm empirically against –

- Wikipedia Trivia Miner (WTM) – “A ranking algorithm over Wikipedia sentences which learns the notion of interestingness using domain-independent linguistic and entity based features.”

- Top Trivia – highest ranked category according to the paper's algorithm.

- Middle-ranked Trivia – middle-of-the-pack ranked categories according to the paper's algorithm.

- Bottom Trivia – lowest ranked categories according to the paper's algorithm.

The authors crawled Wikipedia and created a dataset of trivia facts for 109 articles. For each article they created a trivia fact with each algorithm. The textual format was “a is in the group C”.

Trivia Evaluation Study

Each of the trivia facts above was presented to 5 crowd workers, yielding 2180 evaluations (109 articles × 4 methods × 5 workers).

The respondents were asked to agree or disagree with the following statements about each fact, or to note that they did not understand the fact:

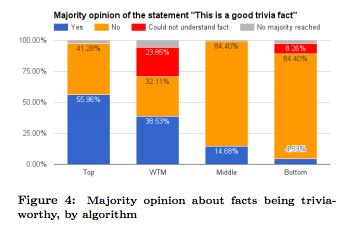

- Trivia worthiness – “This is a good trivia fact”.

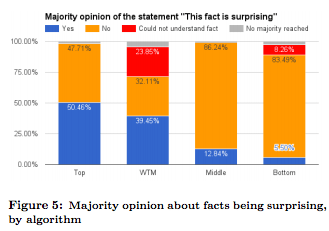

- Surprise – “This fact is surprising”

- Personal knowledge – “I knew this fact before reading it here”

The score of a fact was determined by the majority vote.

Result –

- The top trivia facts are significantly better than the WTM facts

- The consensus on the trivia worthiness of the top facts compared to the WTM facts is higher (32.8% vs 11.9%).

Engagement Study

In this part the team used Google ads to tie trivia facts to searches, and analyzed the bounce rate and time on page for the collected clicks (almost 500).

Results –

- CTR was not significantly different from reported results in the market (0.8), i.e. it does not indicate willingness of users to explore trivia facts.

- Bounce rate (time on page < 5 seconds) was 52% for bottom trivia, 47% for WTM facts and 37% for top trivia.

- Average time on page was significantly better for top trivia compared to bottom trivia (48.5 seconds vs 30.7), but the difference compared to WTM (43.1 seconds) was not significant.

- One reason people spent time on WTM fact pages is that the presented sentences were longer than those presented for the top trivia and had a higher chance of being confusing, so people took time to understand them.

Discussion and Further work

Limitation – the algorithm works well for human entities but worse on other domains such as movies and cities.

Future work –

- Better phrasing of the trivia facts – instead of “X is a member of group Y”, something like “Obama won Best Spoken Word Album Grammy Awards for abridged audiobook versions of Dreams from My Father in February 2006 and for The Audacity of Hope in February 2008”.

- Turning trivia facts into trivia questions – for the example above, generate a question of the form “Which US president is a Grammy award winner?” and not “Who won a Grammy award?” or “What did Barack Obama win?”

[TR] – this would require an additional notion of a good trivia question. The “good” question in this example is interesting since it involves a contrast between two categories.

Other applications –

- Anomaly detection – surprising facts are sometimes surprising because they are wrong. Using this algorithm we can clean those and improve Wikipedia reliability.

- Predict most surprising article in a given category

- Improve the search experience by enriching results with trivia facts

[TR] – Improve the learning experience on learning platforms by enriching the UI with trivia facts.

Extensions –

- Personalized trivia score – as commented above, different readers can find different facts more or less interesting (see here), so the score should be personalized and take into account properties of the reader such as demographics and even more temporal ones like mood.

- [TR] – Additional extensions involve trivia facts relating several entities, such as “Michelle Obama and Melania Trump are the same height” or “X and Y were born on the same date”.

Map Spark UDAF (Java)

I run Spark code in Java. I had data with the following schema:

    root
     |-- userId: string (nullable = true)
     |-- dt: string (nullable = true)
     |-- result: map (nullable = true)
     |    |-- key: string
     |    |-- value: long (valueContainsNull = true)

And I wanted to get a single record per user with the following schema:

    root
     |-- userId: string (nullable = true)
     |-- result: map (nullable = true)
     |    |-- key: string
     |    |-- value: map (valueContainsNull = true)
     |    |    |-- key: string
     |    |    |-- value: long (valueContainsNull = true)

Below is the user-defined aggregation function (UDAF) I wrote to achieve this. First, here is how it is called:

    MergeMapUDAF mergeMapUDAF = new MergeMapUDAF();
    df.groupBy("userId").agg(mergeMapUDAF.apply(df.col("dt"), df.col("result")).as("result"));


    package com.tomron;

    import org.apache.spark.sql.Row;
    import org.apache.spark.sql.expressions.MutableAggregationBuffer;
    import org.apache.spark.sql.expressions.UserDefinedAggregateFunction;
    import org.apache.spark.sql.types.DataType;
    import org.apache.spark.sql.types.DataTypes;
    import org.apache.spark.sql.types.StructField;
    import org.apache.spark.sql.types.StructType;

    import java.util.ArrayList;
    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;

    /**
     * Created by tomron on 7/10/17.
     */
    public class MergeMapUDAF extends UserDefinedAggregateFunction {

        private StructType _inputDataType;
        private StructType _bufferSchema;
        private DataType _returnDataType;

        private static DataType _valueType = DataTypes.LongType;
        private static DataType _innerKeyType = DataTypes.StringType;
        private static DataType _outerKeyType = DataTypes.StringType;
        private static DataType _innerMap = DataTypes.createMapType(_innerKeyType, _valueType);
        private static DataType _outerMap = DataTypes.createMapType(_outerKeyType, _innerMap);

        public MergeMapUDAF() {
            // Input: the outer key (dt) and the inner map (result).
            List<StructField> inputFields = new ArrayList<>();
            inputFields.add(DataTypes.createStructField("key", _outerKeyType, true));
            inputFields.add(DataTypes.createStructField("values", _innerMap, true));
            _inputDataType = DataTypes.createStructType(inputFields);

            // Buffer: a single map from outer key to inner map.
            List<StructField> bufferFields = new ArrayList<>();
            bufferFields.add(DataTypes.createStructField("data", _outerMap, true));
            _bufferSchema = DataTypes.createStructType(bufferFields);

            _returnDataType = _outerMap;
        }

        @Override
        public StructType inputSchema() {
            return _inputDataType;
        }

        @Override
        public StructType bufferSchema() {
            return _bufferSchema;
        }

        @Override
        public DataType dataType() {
            return _returnDataType;
        }

        @Override
        public boolean deterministic() {
            return false;
        }

        @Override
        public void initialize(MutableAggregationBuffer buffer) {
            buffer.update(0, new HashMap<String, Map<String, Long>>());
        }

        @Override
        public void update(MutableAggregationBuffer buffer, Row input) {
            // Rows with a null key are skipped.
            if (!input.isNullAt(0)) {
                String inputKey = input.getString(0);
                Map<String, Long> inputValues = input.<String, Long>getJavaMap(1);
                Map<String, Map<String, Long>> newData = new HashMap<>();

                // Copy what was aggregated so far, then add the new entry.
                if (!buffer.isNullAt(0)) {
                    Map<String, Map<String, Long>> currData = buffer.<String, Map<String, Long>>getJavaMap(0);
                    newData.putAll(currData);
                }
                newData.put(inputKey, inputValues);
                buffer.update(0, newData);
            }
        }

        @Override
        public void merge(MutableAggregationBuffer buffer1, Row buffer2) {
            Map<String, Map<String, Long>> data1 = buffer1.<String, Map<String, Long>>getJavaMap(0);
            Map<String, Map<String, Long>> data2 = buffer2.<String, Map<String, Long>>getJavaMap(0);
            Map<String, Map<String, Long>> newData = new HashMap<>();
            // On duplicate outer keys the entry from buffer2 wins.
            newData.putAll(data1);
            newData.putAll(data2);
            buffer1.update(0, newData);
        }

        @Override
        public Object evaluate(Row buffer) {
            return buffer.<String, Map<String, Long>>getJavaMap(0);
        }
    }

Code Challenges Anti-Patterns

Code challenges are a common tool for evaluating a candidate's ability to develop software. Of course there are other indicators, such as blog posts, open source involvement, GitHub repositories, personal recommendations, etc. Yet, code challenges are frequently used.

I recently got to check some code challenges and was surprised by some of the things I found there. Here are my code challenge anti-patterns –

Call a file \ process on your local machine

    for line in open('/Users/user/code/data.csv'):
        print('No, No, No!')

The person who checks your code challenge cannot just run the code, since she will get a file-not-found error or similar and will have to find where you open the file and why.

If you need to access some resource (file, database, etc.), you can pass it as a command line argument, put it in a config file, or use an environment variable.
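As an illustration of the command line argument and environment variable options, here is a minimal sketch using Python's standard `argparse` module. The flag name `--data`, the variable name `DATA_PATH` and both function names are made up for this example.

```python
import argparse
import os

def resolve_data_path(argv=None):
    """Return the input path from --data, falling back to the DATA_PATH env variable."""
    parser = argparse.ArgumentParser(description="Process a CSV file.")
    parser.add_argument(
        "--data",
        default=os.environ.get("DATA_PATH"),
        help="path to the input CSV (or set DATA_PATH)",
    )
    args = parser.parse_args(argv)
    if args.data is None:
        # Fail with a clear message instead of a confusing file-not-found error.
        parser.error("pass --data or set the DATA_PATH environment variable")
    return args.data

def count_lines(path):
    """Example consumer: count the lines of the file the reviewer pointed us to."""
    with open(path) as handle:
        return sum(1 for _ in handle)
```

A reviewer could then call `count_lines(resolve_data_path(["--data", "data.csv"]))` or, from a script entry point, `count_lines(resolve_data_path())` with the path given on the command line, without touching the source.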

Reinvent the wheel

Write everything by yourself. Why use crowd wisdom or mature projects which are already debugged and tested when you can write everything by yourself the way you like it, with your own unique bugs?

Unless you were told otherwise, many times there is already a package \ library \ API \ design pattern which does part of what you need. E.g. if you need to fetch data from Twitter, there is the Twitter API and there are Twitter clients in different languages. You definitely don’t need to crawl Twitter and process the HTML.

Don’t write a README file

No need to write a README file. Whoever is reading your code is a professional in the tech stack you chose and will immediately know how to start your project, which dependencies are there, which environment variables are needed, etc.

The goal of a README in this context is to explain how to run the code, what is inside the package, and further considerations \ assumptions \ choices you made while working on this challenge.

A detailed README is always priceless, and especially in this context, when the reviewer doesn’t always have direct communication with the candidate. A system diagram \ architecture chart is also recommended when relevant.

If you have further notes, such as ideas on how to expand the system, what you would do next, etc., I would put them in an IDEAS file (e.g. IDEAS.md) and link to it from the README.

Don’t write tests

This is actually the part which highlights your genius. You don’t need to test your code because it is perfect.

Seriously, testing plays a big role in software development and in making sure the code you wrote works as expected. As a viewer from the side, it also gives me a clue about how pedantic you are and how much you care about the quality of your work. This is my first impression of your work, don’t make it your last.

ZIP your code

This is how we deploy and manage versions in our company – I just send my boss a zip file, preferably via slack.

I expect to get a link to a git repository (if you want to be cautious you can use a private repo, e.g. on Bitbucket). There I can see the progress you made while working on the challenge and your commit messages (also a signal of how pedantic you are). As a candidate you also get to demonstrate your version control skills in addition to your coding skills.

Wrap Up

There are of course many other things I could point to, such as general software development practices – magic numbers, meaningful names, spaghetti code, etc. But as said, those are general software development skills that one should use every day; the anti-patterns stated above are IMO especially important in the case of code challenges.

SO end of year surveys

Recently Stack Overflow published a few posts comparing the usage of Stack Overflow between different segments \ scenarios:

- How Do Students Use Stack Overflow?

- What Programming Languages Are Used Most on Weekends?

- Women in the 2016 Stack Overflow Survey

A few comments regarding those posts –

How Do Students Use Stack Overflow?

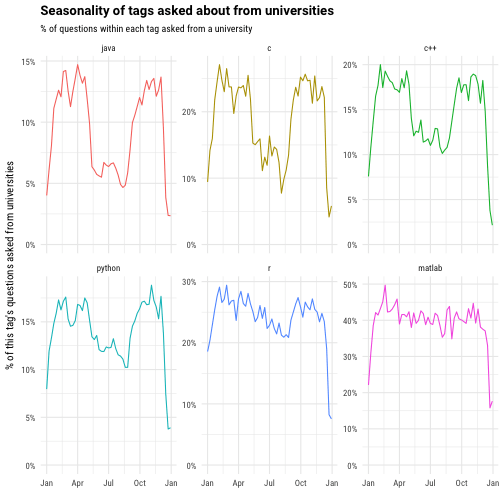

- “R and MATLAB are pretty consistent throughout the year” – those are research-related programming languages and it makes sense that students pursuing advanced degrees use them all year round. Python is also used a lot in research, and one can see that its decrease during the summer vacation is smaller than the decrease in Java and C.

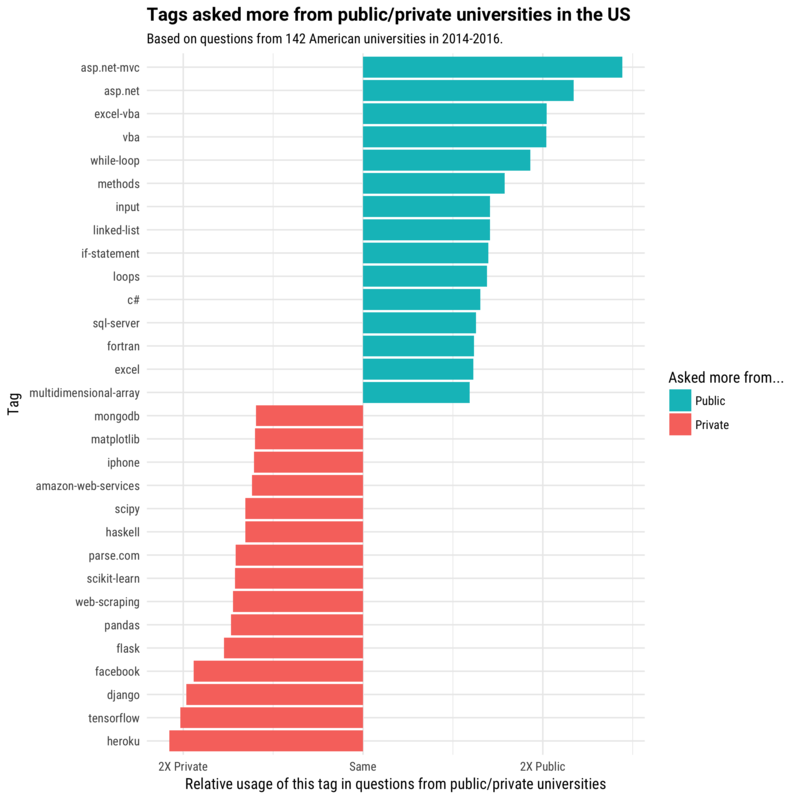

- Public universities vs private universities – the list of the most common tags at public universities includes, besides Microsoft products, also while-loop, methods, input, linked-list, if-statement, loops, etc. Those are all theoretical \ foundational concepts. On the other hand, the private list contains no theoretical concepts besides web-scraping, but rather stack-specific products, e.g. mongodb, iphone, aws, flask, heroku, etc. For me that suggests either that lecturers at private universities explain theoretical concepts better, so the students don’t ask questions about them, or that private universities invest more in preparing the students for the labor market and therefore use more industry products.

- The post ends with a chart of the universities that asked the most R questions relative to other technologies – “The most R-heavy schools … and some are recognizable as having prominent statistics programs”. This brings me to another analysis I would like to see – what are the majors of the students who asked those questions?

- And of course segmenting it together with the other analyses – e.g. how female students use Stack Overflow versus how male students use it. At which universities do students work hardest on the weekends?

What Programming Languages Are Used Most on Weekends?

- Which tags' weekend activity has decreased the most? As SO suggests, the weekend usage of some technologies decreased because their usage decreased in general, such as SVN and probably RoR3 and visual-studio-2012. On the other hand, other technologies such as Scala and maybe Azure became more popular and mainstream, so they are no longer only geek technologies used on weekends. To confirm this claim it would be interesting to see whether the technologies whose weekend usage is currently increasing (e.g. android-fragments, android-layout, unity3d, etc.) become mainstream in a few years.

- It would also be interesting to see whether the weekend technologies are also used during after-work hours (this is of course timezone dependent).

- It would also be interesting to see the difficulty level of weekend posts versus workday posts. Do users use the weekend to learn new technologies and therefore ask relatively basic questions, or do they dive into a technology and ask advanced questions? The difficulty of a question can be evaluated by the time it took to get an answer (more complex question -> longer time to get an answer), up-votes, etc.

Women in the 2016 Stack Overflow Survey

- Those surveys are biased to begin with. Having said that, the gap between the number of respondents who identify as female and those who identify as male cries out loud. It is even bigger than the gap in the labor market. Where does this gap come from? Maybe women are just better at searching, as many of the SO questions are duplicates 😉

- This is also noted in the post itself. It would also be interesting to see which other populations were underrepresented in the survey.

- An interesting analysis I would like to see is the sentiment (aggressive vs calm \ informative answers) segmented by the replier's gender, and maybe also as a function of the asker's gender. I must say that I personally never noticed the asker’s gender.

Davies-Bouldin Index

TL;DR – Yet another clustering evaluation metric

Davies-Bouldin index was suggested by David L. Davies and Donald W. Bouldin in “A Cluster Separation Measure” (IEEE Transactions on Pattern Analysis and Machine Intelligence. PAMI-1 (2): 224–227. doi:10.1109/TPAMI.1979.4766909, full pdf)

Just like the Silhouette score, the Calinski-Harabasz index and the Dunn index, the Davies-Bouldin index provides an internal evaluation scheme, i.e. the score is based on the clustering itself and not on external knowledge such as labels.

The Silhouette score reflects how similar a point is to the cluster it is associated with. I.e. for each point we compute the average distance of the point from the points in the nearest other cluster, minus the average distance of the point from the points in its own cluster, divided by the maximum of those two distances. The overall score is the average of the per-point scores. The Silhouette score is bounded between -1 and 1, and a higher score means more distinct clusters.

The Calinski-Harabasz index compares the variance between clusters to the variance within each cluster. This measure is much simpler to calculate than the Silhouette score, however it is not bounded. The higher the score, the better the separation.

The intuition behind the Davies-Bouldin index is the ratio between the within-cluster distances and the between-cluster distances: for each cluster, take its worst (largest) ratio against any other cluster, then average over all the clusters. It is therefore relatively simple to compute. The score is bounded below by 0 but has no upper bound, and a lower score is better. However, since it measures the distance between clusters’ centroids, it is restricted to using the Euclidean distance function.
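For readers who prefer code to prose, here is a small pure-Python sketch of the Davies-Bouldin computation (it needs Python 3.8+ for `math.dist`). It is written for illustration only and is not the scikit-learn implementation; the function name and the four toy points are assumptions of this example.

```python
import math
from collections import defaultdict

def davies_bouldin(points, labels):
    """Davies-Bouldin index for points (tuples of floats) and their cluster labels."""
    clusters = defaultdict(list)
    for point, label in zip(points, labels):
        clusters[label].append(point)

    # Centroid of each cluster: coordinate-wise mean of its points.
    centroids = {
        label: tuple(sum(coord) / len(members) for coord in zip(*members))
        for label, members in clusters.items()
    }
    # Scatter: average Euclidean distance from the members to their centroid.
    scatter = {
        label: sum(math.dist(p, centroids[label]) for p in members) / len(members)
        for label, members in clusters.items()
    }

    worst_ratios = []
    for i in clusters:
        # Within-cluster scatter relative to centroid separation, against every other cluster.
        ratios = [
            (scatter[i] + scatter[j]) / math.dist(centroids[i], centroids[j])
            for j in clusters
            if j != i
        ]
        worst_ratios.append(max(ratios))
    return sum(worst_ratios) / len(worst_ratios)

points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
tight = davies_bouldin(points, [0, 0, 1, 1])  # left pair vs right pair -> 0.2
mixed = davies_bouldin(points, [0, 1, 0, 1])  # bottom pair vs top pair -> 5.0
```

The well-separated labeling gets the lower (better) score, while the labeling that mixes the two groups gets a much higher one.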

Silhouette score and Calinski-Harabasz index were previously implemented and are part of scikit-learn and I implemented Davies-Bouldin index. Hopefully this will be my first contribution to scikit-learn, my implementation is here.

5 Berlin Data Native 2016 Highlights

- Super Mario from Microsoft (Daniel Molnar) – Data Janitor 101, one of the best reasoned talks I have heard in a long time.

- Andrew Clegg, data scientist @ Etsy, gave a historical review of Semantic Similarity and Taxonomic Distance and how they are used at Etsy. Slides are here.

- Topic Modeling on GitHub repositories – presented by Vadim Markovtsev from source{d}. Why is that interesting? A few possible evolutions – code quality, natural language to code. Another interesting direction to have a look at – bigartm.

- Data Thinker – Is it a new role? Is it just a buzzword? Klaas Bollhoefer from the Unbelievable Machine presented their ideas.

- Databases – The Choice is Yours – Philipp Krenn from Elastic presented his view on the databases field.

Data Natives Berlin 2016 (1st day)

Detecting Data Errors: Where are we and what needs to be done?

My summary and notes for “Detecting Data Errors: Where are we and what needs to be done?” by Ziawasch Abedjan, Xu Chu, Dong Deng, Raul Castro Fernandez, Ihab F. Ilyas, Mourad Ouzzani, Paolo Papotti, Michael Stonebraker, Nan Tang Proceedings of the VLDB Endowment 9.12 (2016): 993-1004.

Paper can be found – here

In this paper the researchers evaluate several data cleaning tools for detecting different types of data errors and suggest a strategy for holistically running multiple tools to optimize the detection effort. The study focuses on automatically detecting errors and not on repairing them, since automatic repair is rarely allowed.

Current status – current data cleaning solutions usually belong to one or more of the following categories:

- Rule-based detection algorithms – the user specifies a set of rules that the data must obey, such as not-null constraints, functional dependencies or user-defined functions, and the data cleaner finds any violations. Example: NADEEF.

- Pattern enforcement and transformation tools – tools in this category discover either syntactic or semantic patterns in the data and use them to detect errors. Example: OpenRefine, Data Wrangler, DataXFormer, Trifacta, Katara.

- Quantitative error detection algorithms – find outliers and glitches in the data.

- Record linkage and de-duplication algorithms – identify records which refer to the same entity and are inconsistent \ appear multiple times. Examples: Data Tamer, TAMR.

Evaluation of tools

- Precision and recall of each tool

- Errors detected when applying all the tools together

- How many false positives are detected as we would like to minimize the human effort.

Error types

- Outliers include data values that deviate from the distribution of values in a column of a table.

- Duplicates are distinct records that refer to the same real-world entity. If attribute values do not match, this could signify an error.

- Rule violations refer to values that violate any kind of integrity constraints, such as Not Null constraints and Uniqueness constraints.

- Pattern violations refer to values that violate syntactic and semantic constraints, such as alignment, formatting, misspelling and semantic data types.

(p. 995)

Some errors overlap and fit into more than one category.

[TR] – are those all the error types which exist? What about correlated errors between several records?

Data sets

[TR] – there are many data sets specific details in the paper. As I am more interested in the ideas those details will be omitted here.

[TR] – the paper evaluated relatively small datasets with a small number of columns. It would also be interesting to evaluate the tools on bigger and more complex datasets, e.g. Wikidata.

[TR] – consider the temporal dimension of the data, i.e. some properties may have an expiration date while others do not (e.g. birth place never changes while current location changes).

Data cleaning tools

| Error type | DBoost | DC-Clean | OpenRefine | Trifacta | Pentaho | KNIME | Katara | TAMR |
|---|---|---|---|---|---|---|---|---|
| Pattern violations | | | + | + | + | + | + | |
| Constraint violations | | + | | | | | | |
| Outliers | + | | | | | | | |
| Duplicates | | | | | | | | + |

- DBoost – uses 3 common methods for outlier detection – histograms, Gaussian and multivariate Gaussian mixtures. The unique value proposition of this tool is decomposing types into their building blocks, for example expanding dates into day, month and year. DBoost requires configuration, such as the number of bins and their width for histograms, and the mean and standard deviation for Gaussian and GMM.

- DC-Clean – focuses on denial constraints, which subsume the majority of the commonly used constraint languages. The collection of denial constraints was designed for each data set.

- OpenRefine – can digest data in multiple formats. Data exploration is performed through faceting and filtering operations ([TR] – reminiscent of DBoost histograms).

- Trifacta – a commercial product which was developed from DataWrangler. It can predict and apply syntactic data transformations for data preparation and data cleaning. Transformations can also involve business logic.

- Katara – uses external knowledge bases, e.g. Yago, in order to detect errors that violate a semantic pattern. It does so by first identifying the type of each column and the relations between pairs of columns in the data set using a knowledge base.

[TR] – assumes that the knowledge base is ground truth. We need to doubt this as well.

- Pentaho – provides a graphical interface for data wrangling and can orchestrate ETL processes.

- KNIME – focuses on workflow authoring and encapsulating data processing tasks with some machine learning capabilities.

- TAMR – uses machine learning models to learn duplicate features through expert sourcing and similarity metrics.

Combination of Multiple tools

- Union all and Min-K –

- Union all – takes the union of the errors emitted by all tools (i.e k=1)

- Min-k – error detected by at least k tools.

- Ordering based on Precision

- Cost model –

- C – cost of having a human check a detected error

- V – value of identifying a real error (V > C, otherwise it makes no sense).

- P – Number of true positives

- N – Number of false positives

For a tool to be worth running, the total value should exceed the total cost – P * V > (P + N) * C => P / (P + N) > C / V. P / (P + N) is the precision. Therefore, if the tool's precision is less than C/V we should not run it. The precision can be estimated by sampling the detected errors.

“We observed that some tools are not worth evaluating even if their precision is higher than the threshold, since the errors they detect may be covered by other tools with higher estimate precision (which would have been run earlier).” (p. 998)
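The union-all \ min-k combination and the precision threshold from the cost model can be sketched in a few lines of Python. The tool names, the flagged cells and the numbers below are invented for illustration; the paper's own implementation is not shown here.

```python
from collections import Counter

def min_k(detections, k):
    """Cells flagged by at least k tools; k=1 is the union of all tools."""
    counts = Counter(cell for flagged in detections.values() for cell in set(flagged))
    return {cell for cell, n in counts.items() if n >= k}

def worth_running(true_positives, false_positives, cost, value):
    """True when the sampled precision P / (P + N) exceeds the threshold C / V."""
    precision = true_positives / (true_positives + false_positives)
    return precision > cost / value

# Hypothetical detections: tool name -> set of (row, column) cells flagged as errors.
detections = {
    "outlier_tool": {(1, "age"), (7, "salary")},
    "rule_tool": {(1, "age"), (3, "zip")},
    "pattern_tool": {(3, "zip"), (1, "age")},
}

union_all = min_k(detections, 1)     # every cell flagged by any tool
at_least_two = min_k(detections, 2)  # cells that two or more tools agree on
```

Raising k trades recall for precision: `union_all` contains three cells here, `at_least_two` only the two cells that several tools agree on. With C = 1 and V = 5 the threshold is 0.2, so a tool with a sampled precision of 0.3 passes `worth_running` while one with 0.1 does not.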

[TR] – the cost and value cannot always be estimated correctly and easily, and not all errors have the same cost and value.

- Maximum entropy-based order selection – the algorithm estimates the overlap between the tools' results and picks the tool with the highest precision to reduce the entropy. Algorithm steps:

- Run individual tools – run each tool and get the detected errors.

- Estimate the precision of each tool by checking samples.

- Pick the tool which maximizes the entropy among the tools unused so far – pick the one with the highest estimated precision on the sample and verify those of its detected errors on the complete data that have not been verified before.

- Update – update the errors that were detected by the chosen tool in the last step and repeat the last two steps.
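The loop described by these steps can be approximated with a simple greedy sketch. Note the simplifications, which are assumptions of this example and not the paper's algorithm: the set `true_errors` stands in for the human who verifies samples, precision is computed on all not-yet-verified detections rather than on a sample, the entropy computation is skipped, and the loop stops once no tool beats the C / V threshold.

```python
def greedy_tool_order(detections, true_errors, cost, value):
    """Repeatedly pick the tool with the highest precision on not-yet-verified cells."""
    verified = set()              # cells a human has already checked
    order = []                    # tools in the order they were chosen
    remaining = dict(detections)  # tools not used so far

    while remaining:
        precision = {}
        for tool, flagged in remaining.items():
            fresh = flagged - verified
            # `true_errors` plays the role of the human verification step.
            precision[tool] = len(fresh & true_errors) / len(fresh) if fresh else 0.0

        best = max(precision, key=precision.get)
        if precision[best] <= cost / value:
            break  # no remaining tool is worth the human effort
        order.append(best)
        verified |= remaining.pop(best)

    return order, verified & true_errors

# Hypothetical tools flagging record ids; ids 1, 2, 3, 5 and 7 are real errors.
detections = {
    "A": {1, 2, 3, 4},
    "B": {3, 4, 5, 6},
    "C": {7, 8, 9, 10},
}
order, found = greedy_tool_order(detections, {1, 2, 3, 5, 7}, cost=1, value=4)
```

Here tool A is chosen first, then B (re-scored only on the cells A did not cover), and C is skipped because its precision does not exceed the threshold of 0.25, so error 7 stays undetected.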

Discussion and Improvements

- Using domain-specific tools – for example AddressCleaner. [TR] – only relevant when such a tool exists and is easy \ cheap to use.

- Enrichment – rule-based systems and duplicate detection strategies can benefit from additional data. Future work would consider a data enrichment system.

Conclusions

- No clear winner – different tools worked well on different data sets, mainly due to different distributions of error types. Therefore a holistic strategy must be used.

- Since some errors overlap, one can order the tools to minimize false positives. However, the ordering strategy is data set specific.

- Yet, not 100% of the errors are detected. Suggested ways to improve this are –

- Type-specific cleaning – for example date cleaning tools, address cleaning tools, etc. Even those tools are limited in their recall.

- Enrichment of the data from external sources

Future work

- A holistic combination of tools – algorithms for combining tools. [TR] – reminiscent of ensemble methods from machine learning.

- Data enrichment system – adding relevant knowledge and context to the data set.

- Interactive dashboard

- Reasoning on real-world data